Nginx + Image Archive

DISCLAIMER! We make no claims or guarantees of this approach's security. If in doubt, enlist the help of an expert and conduct proper audits.

At a certain point, you may want others to have access to your instance of the OHIF Viewer and its medical imaging data. This post covers one of many potential setups that accomplish that. Please note that user account control is noticeably absent.

Do not use this recipe to host sensitive medical data on the open web. Depending on your company's policies, this may be an appropriate setup on an internal network when protected with a server's basic authentication. For a more robust setup, check out our user account control recipe that builds on the lessons learned here.

Overview

Our two biggest hurdles when hosting our image archive and web client are:

- Risks related to exposing our PACS to the network

- Cross-Origin Resource Sharing (CORS) requests

Handling Web Requests

We mitigate our first issue by letting Nginx handle incoming web requests. Nginx is open source software for web serving, reverse proxying, caching, and more. It's designed for maximum performance and stability, allowing us to serve content more reliably than Orthanc's built-in server can.

More specifically, we use a reverse proxy both to retrieve resources from our image archive (Orthanc) and to access its web admin.

A reverse proxy is a type of proxy server that retrieves resources on behalf of a client from one or more servers. These resources are then returned to the client, appearing as if they originated from the proxy server itself.

CORS Issues

Cross-Origin Resource Sharing (CORS) is a mechanism that uses HTTP headers to tell a browser which web applications have permission to access selected resources from a server at a different origin (domain, protocol, port). For example, by default, a Web App located at http://my-website.com can't access resources hosted at http://not-my-website.com.
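To make "origin" concrete: two URLs share an origin only when scheme, host, and port all match, with the default port (80 for http, 443 for https) filled in when none is written. The POSIX shell functions below are a simplified sketch of that comparison; `origin_of` and `same_origin` are hypothetical helper names, not part of this setup, and they deliberately ignore user info, IPv6 literals, and letter case.

```shell
# origin_of URL -> prints "scheme://host:port", filling in the default port.
# Simplified sketch: no user info, IPv6 literals, or case-folding.
origin_of() {
  scheme=${1%%://*}        # "http" from "http://host/path"
  rest=${1#*://}           # "host/path"
  hostport=${rest%%/*}     # "host" or "host:8042"
  case $hostport in
    *:*) ;;                # explicit port already present
    *)
      case $scheme in
        https) hostport=$hostport:443 ;;
        *)     hostport=$hostport:80 ;;
      esac
      ;;
  esac
  printf '%s://%s\n' "$scheme" "$hostport"
}

# same_origin URL_A URL_B -> exit status 0 if scheme, host, and port all match.
same_origin() {
  [ "$(origin_of "$1")" = "$(origin_of "$2")" ]
}
```

For example, `same_origin http://127.0.0.1/ http://127.0.0.1/pacs/studies` succeeds, while `same_origin http://127.0.0.1/ http://127.0.0.1:8042/` fails. Orthanc's built-in server listens on port 8042 by default, so a viewer page calling it directly on that port would be making a cross-origin request.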

We can solve this in one of two ways:

- Have our Image Archive located at the same domain as our Web App

- Add appropriate `Access-Control-Allow-*` HTTP headers

This solution uses the first approach.
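If you ever take the second approach instead, one quick way to see whether a server answers with a usable header is to inspect its response headers. The sketch below reads raw response headers on stdin and succeeds when `Access-Control-Allow-Origin` is `*` or exactly the requesting origin; `cors_allows` is a hypothetical helper name. It only checks that one header: preflighted requests also depend on headers such as `Access-Control-Allow-Methods` and `Access-Control-Allow-Headers`.

```shell
# cors_allows ORIGIN < HEADERS
# Succeeds if the headers on stdin contain Access-Control-Allow-Origin
# set to "*" or to exactly ORIGIN.
cors_allows() {
  # Strip CRs so CRLF-terminated header lines compare cleanly.
  tr -d '\r' | awk -v origin="$1" '
    # Header names are case-insensitive, so compare the lowercased name.
    tolower(substr($0, 1, 28)) == "access-control-allow-origin:" {
      value = substr($0, 29)
      gsub(/^[ \t]+|[ \t]+$/, "", value)   # trim surrounding whitespace
      if (value == "*" || value == origin) found = 1
    }
    END { exit(found ? 0 : 1) }
  '
}
```

For example: `curl -s -D - -o /dev/null -H "Origin: http://my-website.com" http://not-my-website.com/some/resource | cors_allows http://my-website.com && echo allowed`. Here `-D -` writes the response headers to stdout and `-o /dev/null` discards the body; the URL is only a placeholder.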

You can read more about CORS in this Medium article: Understanding CORS

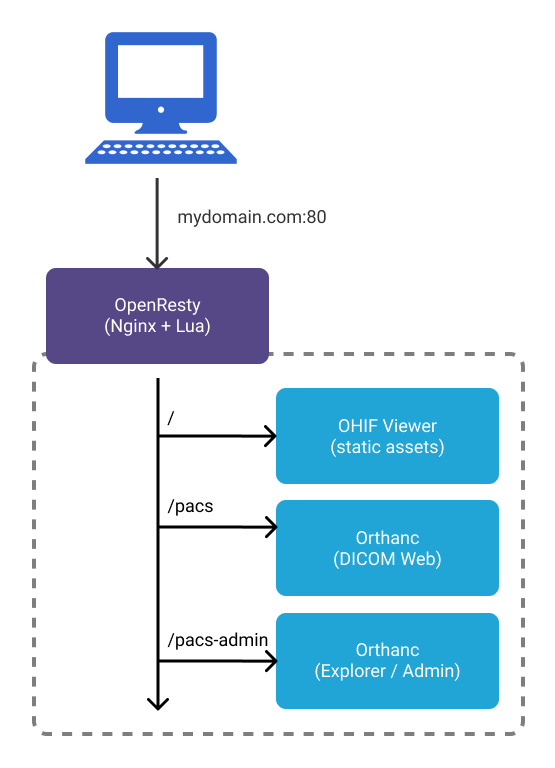

Diagram

This recipe lets us create a setup similar to the one in the diagram:

- All web requests are routed through `nginx` on our `OpenResty` image
  - `/pacs` is a reverse proxy for `orthanc`'s DICOM Web endpoints
  - `/pacs-admin` is a reverse proxy for `orthanc`'s Web Admin

- All static resources for OHIF Viewer are served up by `nginx` when a matching route for that resource is requested
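The routing rules above amount to a path-prefix dispatch. As a rough sketch only (the real rules live in this setup's `nginx.conf` and may differ in detail), here is the same table written as a shell `case` statement; `route_for` is a hypothetical name used for illustration.

```shell
# route_for PATH -> which service answers a request path in this setup.
# Illustrative only: mirrors the route list above, not the actual nginx.conf.
route_for() {
  case $1 in
    /pacs-admin|/pacs-admin/*) echo "orthanc web admin (reverse proxy)" ;;
    /pacs|/pacs/*)             echo "orthanc DICOMweb (reverse proxy)" ;;
    *)                         echo "nginx static files (OHIF Viewer)" ;;
  esac
}
```

This sketch matches whole path segments, so `/pacs-admin` never falls into the `/pacs` branch. nginx's plain prefix `location` blocks behave differently: `/pacs` is also a string prefix of `/pacs-admin`, and (absent regex locations) nginx resolves that by choosing the longest matching prefix.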

Getting Started

Requirements

- Docker

Not sure if you have docker installed already? Try running `docker --version` in a command prompt or terminal.
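If you'd rather check everything this recipe uses in one go, a small sketch like the following reports which commands are missing from your `PATH`. `require_cmds` is a hypothetical helper, not part of the repository.

```shell
# require_cmds CMD...
# Reports each command as found or missing; returns non-zero if any are missing.
require_cmds() {
  status=0
  for cmd in "$@"; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "found: $cmd"
    else
      echo "missing: $cmd" >&2
      status=1
    fi
  done
  return "$status"
}
```

Run it as `require_cmds docker docker-compose`. Newer Docker releases may provide Compose as the `docker compose` subcommand rather than a separate `docker-compose` binary; in that case the second check can fail even though Compose is available.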

Setup

Spin Things Up

- Navigate to `<project-root>/docker/OpenResty-Orthanc` in your shell

- Run `docker-compose up`

Upload Your First Study

- Navigate to http://127.0.0.1/pacs-admin

- From the top right, select "Upload"

- Click "Select files to upload..." (DICOM)

- Click "Start the upload"

- Navigate back to http://127.0.0.1/ to view your studies in the Study List
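For more than a handful of files, uploading from a shell may be easier than the web form. Orthanc's REST API accepts a DICOM file as the body of a `POST` to its `/instances` route. The sketch below assumes that `http://127.0.0.1/pacs-admin` reaches Orthanc's REST root through the reverse proxy, which depends on how `nginx.conf` maps that path; if your Orthanc instance requires authentication, you would also need to pass credentials to `curl`. `is_dicom` and `upload_dicom` are hypothetical helper names.

```shell
# is_dicom FILE -> succeeds if FILE has the "DICM" marker at byte offset 128
# (the DICOM Part 10 preamble is 128 bytes long).
is_dicom() {
  [ -f "$1" ] || return 1
  # od/tr render the 4 bytes as plain text and avoid NUL bytes in $(...).
  magic=$(dd if="$1" bs=1 skip=128 count=4 2>/dev/null | od -A n -c | tr -d ' \n')
  [ "$magic" = "DICM" ]
}

# upload_dicom BASE_URL FILE...
# POSTs each DICOM file to BASE_URL/instances (ASSUMED to reach Orthanc's REST root).
upload_dicom() {
  base=${1%/}   # drop a trailing slash so we don't build "//instances"
  shift
  for f in "$@"; do
    if ! is_dicom "$f"; then
      echo "skipped (no DICM marker): $f" >&2
      continue
    fi
    if curl -fsS -X POST --data-binary @"$f" "$base/instances" >/dev/null; then
      echo "uploaded: $f"
    else
      echo "failed: $f" >&2
    fi
  done
}
```

For example: `upload_dicom http://127.0.0.1/pacs-admin ./my-study/*.dcm`. Files without the `DICM` marker at byte offset 128 are skipped as a simple sanity check before anything is sent.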

Troubleshooting

Exit code 137

This means Docker ran out of memory. Open Docker Desktop, go to the Advanced tab, and increase the amount of memory available.

Cannot create container for service X

Use this one with caution: `docker system prune` removes all stopped containers, unused networks, dangling images, and build cache.

X is already running

Stop running all containers:

- Win: `docker ps -a -q | ForEach { docker stop $_ }`

- Linux: `docker stop $(docker ps -a -q)`
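The Linux command above exits with an error when there is nothing to stop, because `docker stop` is then called with no arguments, and `docker ps -a -q` also lists containers that have already exited. A slightly more defensive sketch stops only running containers and does nothing when there are none; `stop_all_containers` is a hypothetical name.

```shell
# stop_all_containers: stop every *running* container, if there are any.
stop_all_containers() {
  ids=$(docker ps -q)          # IDs of running containers only
  if [ -z "$ids" ]; then
    echo "no running containers"
    return 0
  fi
  # $ids is intentionally unquoted so each ID becomes a separate argument.
  docker stop $ids
}
```

If you only want to stop this recipe's containers rather than every container on the machine, running `docker-compose down` from `<project-root>/docker/OpenResty-Orthanc` stops and removes just the containers that `docker-compose up` started there.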

Configuration

After verifying that everything runs with default configuration values, you will likely want to update:

- The domain: http://127.0.0.1

OHIF Viewer

The OHIF Viewer's configuration is imported from a static .js file. Which file is used is determined when we build the viewer, by the environment variable `APP_CONFIG`. You can see where we set its value in the dockerfile for this solution:

ENV APP_CONFIG=config/docker_openresty-orthanc.js

You can find the configuration we're using here:

/public/config/docker_openresty-orthanc.js

To rebuild the webapp image created by our dockerfile after updating the Viewer's configuration, you can run:

`docker-compose build` OR `docker-compose up --build`

Other

All other files are found in: /docker/OpenResty-Orthanc/

| Service | Configuration | Docs |
|---|---|---|
| OHIF Viewer | dockerfile | You're reading them now! |
| OpenResty (Nginx) | /nginx.conf | lua-resty-openidc |
| Orthanc | /orthanc.json | Here |

Next Steps

Deploying to Production

While these configuration and docker-compose files model an environment suitable for production, they are not easy to deploy "as is". You can either:

- Manually recreate this environment and deploy built application files OR

- Deploy to a cloud kubernetes provider like Digital Ocean OR

- Find and follow your preferred provider's guide on setting up swarms and stacks

Adding SSL

Adding SSL registration and renewal for your domain with Let's Encrypt, with SSL terminating at Nginx, is an incredibly important step toward securing your data. Here are some resources, specific to this setup, that may be helpful:

While we terminate SSL at Nginx, it may be worth using self-signed certificates for communication between services.

Use PostgreSQL w/ Orthanc

Orthanc can handle a large amount of data and requests, but if you find that requests start to slow as you add more and more studies, you may want to configure your Orthanc instance to use PostgreSQL. Instructions on how to do that can be found in the Orthanc Server Book, under "PostgreSQL and Orthanc inside Docker".

Improving This Guide

Here are some improvements this guide would benefit from, and that we would be more than happy to accept Pull Requests for:

- SSL Support

- Complete configuration with `.env` file (or something similar)

- Any security issues

- One-click deploy to a cloud provider

Resources

Misc. Helpful Commands

Check if nginx.conf is valid:

docker run --rm -t -a stdout --name my-openresty -v $PWD/config/:/usr/local/openresty/nginx/conf/:ro openresty/openresty:alpine-fat openresty -c /usr/local/openresty/nginx/conf/nginx.conf -t

Interact w/ running container:

docker exec -it CONTAINER_NAME bash

List running containers:

docker ps

Referenced Articles

For more documentation on the software we've chosen to use, you may find the following resources helpful:

For a different take on this setup, check out the repositories our community members put together: